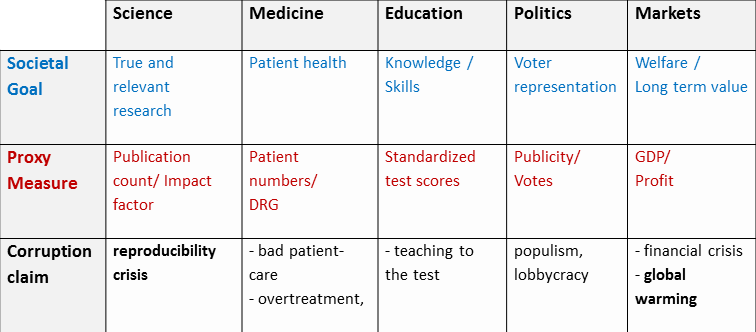

The purpose of this site is to present what I (Oliver Braganza) call Proxyeconomics. It highlights that whenever we as humans try to practically achieve some abstract goal (see table below), we almost inevitably depend on imperfect proxy measures of this goal, and that this has profound practical and ethical consequences. Specifically, the proxies tend to become targets in themselves, undermining the original (or purported) goals, without us even noticing. The result can be proxy-economies, where increasing amounts of effort and resources serve some proxy measure which has fully decoupled from the goal it was supposed to serve. Ecological and welfare economists are increasingly making the case that this quite accurately describes modern western economies, where exponentially increasing economic output and environmental damage are producing statistically negligible welfare gains. The present theory suggests that this is in fact an almost inevitable result of the unreflective use of proxy measures, such as profit or consumption, in economic (or any other) competition.

While I’ll try to avoid jargon here, there are several peer-reviewed papers developing the general theory (e.g. RSOS, 2022) or its implications in specific contexts (e.g. PloS1, 2020, CogSci, 2022). I’d like to particularly recommend a recent article in the Behavioral and Brain Sciences (BBS, 2023). It highlights the ubiquity of the phenomenon in not only human but even natural systems, suggesting an even more general theory of Proxynomics. The present site is about the human, or social, phenomenon.

In a nutshell.

Proxyeconomics could be called a theory of Campbell’s (or Goodhart’s) law in competitive societal systems. The central insight is that any competitive societal system depends on proxy measures to mediate competition. Goodhart’s Law states that ‘When a measure becomes a target, it ceases to be a good measure’. Putting both together leads to the ‘central conjecture’ of proxyeconomics:

‘Any proxy measure in a competitive societal system becomes a target for the competing individuals, promoting corruption of the measure’.

In all these fields there are prominent voices arguing that the measures, or the actions and practices of competing individuals, are corrupted. Consider the following examples:

What binds all these diverse corruption claims together is proxy failure. In all these cases, the proxy measures become targets for the competing individuals (or groups). And in all these cases authoritative voices are claiming that competition is having adverse effects, supporting their claims with substantial, often cringe-worthy, evidence (see below). However, raising the topic in any specific context can quickly lead to heated discussions. Often the crucial questions remain: ‘Are the proxy measures really corrupted?’, ‘How corrupted are they?’ and ‘Is there any way to counteract corruption?’ Trying to answer these questions is extremely important and notoriously difficult. This site attempts to draw together numerous different disciplinary perspectives, ranging from information/complexity theory via psychology to economics and sociology. Importantly, every aspect I discuss derives from the central conjecture stated above. The driving insight is that when we try to optimize something in a complex environment, we get Goodhart’s Law. How and why this happens remains unclear.

I believe the resulting theory of corruption in competitive systems will allow us to understand the underlying mechanisms, interpret the evidence and make testable predictions. It will allow us to investigate when and how proxy measures get corrupted and what we might do against it.

Introduction

Competition is a way to get stuff done. It provides the necessary motivation to focus and to organize. It’s also a way to select the most qualified people for the appropriate positions. Economists would say it’s an ideal way to allocate scarce resources. While the theory of proxyeconomics talks mostly about negative side-effects of competition, this is not meant to imply that the above statements are wrong. It does imply that they probably almost always have to be taken with a grain of salt. Let me restate:

‘Any proxy measure in a competitive societal system becomes a target for the competing individuals, promoting corruption of the measure’.

Whether or not the system gives in to the corruptive pressure is another question. The first goal of the theory of proxyeconomics is to provide the tools to assess when this happens, or in which societal systems. Perhaps the best starting point for this is to acknowledge a crucial difficulty of the theory, namely that the societal goal is often difficult to quantify or even define precisely, as discussed here. This caveat is critical when assessing the qualitative and quantitative evidence of proxy-corruption reviewed here. Importantly, in a competitive societal system, we ultimately have individuals making decisions, and these individuals may factor in the competitive realities as well as other personal or social values such as professional/moral considerations. How this may occur is studied by psychologists, economists, behavioral ethicists and most recently neuroscientists (reviewed here). A part of this question is so complex that it merits its own section, namely the psychological effects of competition (reviewed here). Finally, there are effects of competition which are beyond an individual’s power, namely selection. Given that the complex ways in which we do things are in large part learned and transmitted in a cultural context, selection is likely to cause a form of cultural evolution (reviewed here). Together, I hope, these considerations will create a better understanding of the potential mechanisms behind proxy-orientation and can help to diagnose a system. But they also suggest systematic ways of improving upon a suboptimal system (reviewed here) leading to specific policy implications (reviewed here).

One particular case of interest is of course the economic system as a whole. Might our world economy be creating ‘corrupted’ practices which maximize wealth production but don’t actually increase human welfare? A lot of recent research certainly seems to make this case, at least for industrialized nations. The question seems even more pressing in the light of global warming, where a particular aspect of the theory, namely the potential for lock-in effects, may partially explain our inaction. A lock-in effect is produced in a proxy economy when no individual actor can break out of proxy orientation because selection will immediately remove her from the system. So the stakes are high, and a scientific framework able to explain confusing inconsistencies and make predictions may be worth a lot.

Perhaps one last thing should be mentioned at the outset. This is neither an argument against competitive societal systems nor against proxy measures in general. By and large, I believe both have been and are essential components of human progress and welfare. Part of the reason is of course the effort-incentivizing and resource-directing effect of competition. While most of this theory is about where this can go wrong, we should keep in mind that it often seems to work well. In fact there is a reason why I believe proxy-based competition, by and large, works well. The reason is that conscientious individuals constantly work at monitoring and correcting instances where the proxy has gone wrong. Indeed, it may be the corruption induced by proxy-based competition that drives the creation of norms and institutions in a spiral of genuine progress. But if society starts blindly serving self-reinforcing proxy measures, then they can become an end in themselves.

The information gradient

In any ‘proxy based competition in a societal system’ an information gradient arises invariably through the measurement process. The societal goal is, by definition, what we want to achieve as a society, so it contains all the information. We might say the societal goal of the healthcare system is to maximize patient health. Now, defining what this really means in detail is extremely difficult, and may be the ever changing result of an ongoing societal discussion. The important thing to realize is that whatever it may mean, when we try to measure it we will a) lose some information and b) measure some things which we didn’t intend to measure. An instructive analogy is to machine learning, which has provided some excellent recent information theoretic treatments of this subject 1,2. In both societal systems and machine learning problems one collects information in order to optimize an unknown function (goal). In machine learning one now creates a model which collects all the information and transforms it into an objective function (proxy). The model performs the task of weighting all the information, deciding what factors in positively and what negatively and in which mathematical form (e.g. linear, exponential, logarithmic, etc.). It ultimately provides a low dimensional metric to decide what’s good and what’s not. The same is done by a competitive societal system, for instance through the publication process, peer-review, etc. Though the societal system may have more heterogeneous, more dynamic and less formal mechanisms to create the proxy, it must ultimately provide a performance ranking of competitors, i.e. a low dimensional metric.

What David Manheim and Scott Garrabrant have christened a ‘Goodhart phenomenon’, and I have been simply calling corruption, now occurs whenever this metric is used for decision making, such as selection 1,3. The first important insight is that corruption is likely to occur for a variety of purely statistical reasons. Manheim and Garrabrant have categorized four classes of ‘Goodhart’ phenomena, only one of which involves any gaming or ill intent, and I highly recommend their overview. The second important insight is that corruption is likely to grow in proportion to control/optimization pressure. Importantly, this holds for statistical as well as intentional Goodhart phenomena.

In a competitive societal system, all four classes of Manheim and Garrabrant’s Goodhart phenomena are likely to occur simultaneously and at multiple levels. Optimization pressure roughly corresponds to competitive pressure. So there are many reasons to assume a general misalignment between proxy and goal. In particular, creating a good proxy measure (or objective function) requires money and time. For instance, in science you could always add more reviewers to assess a submitted paper, or perhaps even require an independent reproduction of the key results. Though this would certainly increase the information about the scientific value of a given publication, it is just too costly to do. So we generally have to accept quite imperfect proxies. What this suggests is that the competitive pressure in a system should match the proxy fidelity. Too little competitive pressure may lead to underincentivization and resource misallocation. But too much competitive pressure will likely lead to effort and resource misallocation, i.e. Campbell’s law.
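The purely statistical part of this argument can be made concrete with a small simulation. The following is a minimal sketch, not a model taken from the cited papers: it assumes that each competitor's true contribution to the goal is normally distributed, that the proxy is this contribution plus independent normal measurement noise, and that competition simply selects the top fraction by proxy. The function name `simulate_selection` and all parameter values are hypothetical choices for illustration.

```python
import random
import statistics


def simulate_selection(n_competitors=10_000, proxy_noise=1.0, top_fraction=0.1, seed=0):
    """Select the top fraction of competitors by proxy; return mean proxy and mean goal."""
    rng = random.Random(seed)
    competitors = []
    for _ in range(n_competitors):
        goal = rng.gauss(0.0, 1.0)                   # true, unobserved contribution to the goal
        proxy = goal + rng.gauss(0.0, proxy_noise)   # imperfect measurement of that contribution
        competitors.append((proxy, goal))
    competitors.sort(reverse=True)                   # rank by proxy, best first
    n_selected = max(1, int(top_fraction * n_competitors))
    selected = competitors[:n_selected]
    mean_proxy = statistics.fmean([p for p, _ in selected])
    mean_goal = statistics.fmean([g for _, g in selected])
    return mean_proxy, mean_goal


if __name__ == "__main__":
    # Stronger selection (smaller top fraction) stands in for higher competitive pressure.
    for top_fraction in (0.5, 0.1, 0.01):
        mean_proxy, mean_goal = simulate_selection(top_fraction=top_fraction)
        gap = mean_proxy - mean_goal
        print(f"top fraction {top_fraction}: proxy={mean_proxy:.2f} goal={mean_goal:.2f} gap={gap:.2f}")
```

Under these assumptions the selected competitors look better on the proxy than they are on the goal, and the gap widens as selection becomes harsher, even though nobody games anything. Lowering `proxy_noise` (a higher fidelity proxy) shrinks the gap, which is the sense in which competitive pressure should match proxy fidelity.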

The epistemic gradient

While we are thinking about information, it is instructive to consider the types of information that are most likely to be lost by the proxy. I call this part of the problem the epistemic gradient, because we must expect a quite systematic difference in how and how well we can know different aspects of the societal goal at any given time. Specifically, corruption will tend to always affect those aspects of a societal goal that are difficult to assess or define. Otherwise they would probably have been incorporated into the proxy.

At first glance this is a problem, because I’m saying there is probably corruption, but we won’t be able to measure it. Any theory that makes grand claims but contains a passage about how you won’t be able to prove them is justifiably suspect. But luckily, corruption will only affect those specific aspects that are currently part of the proxy. For instance, alternative measures which become available beyond the timeframe of competition, such as scientific reproducibility, environmental costs or financial risks, will not be incorporated into the proxy, and thus will remain good measures. Accordingly, we can use these alternative quantitative measures to assess potential problems with the proxy. Indeed, we can construct detailed empirically grounded models of how proxies are actually created and make precise predictions about alternative proxies or patterns of outcomes that would occur in case of proxy orientation. (I have collected some examples in the evidence section.)

One important point to note is that this epistemic gradient suggests systematic roles for qualitative and quantitative approaches within science. As discussed above, proxies are often attempts to reduce complex multidimensional goals to simple scalar metrics, i.e. to quantify. Any aspects of the societal goal that are difficult to quantify are likely to be preferentially lost in the proxy. This suggests a systematic role for qualitative research in determining proxy orientation. Ultimately, such qualitative research may lead to novel quantitative measures. However, it is important to remember that as soon as these measures are incorporated into the proxy, they too are susceptible to corruption. Furthermore, some aspects of reality may simply be difficult to quantify. For all these reasons, qualitative findings may be ideal sentinels for potential excessive proxy orientation. For instance, surveys can monitor the subjective assessments of individuals within a respective societal system 4. A relevant metric might be whether professionals feel competitive pressures interfere with their moral preferences, or indeed whether they systematically avoid considering whether this might be the case. For instance, recent sociological work has addressed the valuation practices of young researchers, and describes a pattern consistent with excessive proxy orientation 5. Briefly, the authors find a systematic shift of valuation practices from heterarchical, socially or morally oriented practices toward hierarchical, competition oriented practices. In other words, individuals stop valuing social and moral goals and start caring only about how well they are doing in competition. Such a pattern is consistent with a broad reorganization of cultural practices toward proxy measures. In general, the qualitative pattern must indicate practices that are beneficial to the proxy but irrelevant or detrimental to the actual societal goal.

Finally, all this has an important implication for individuals who are ultimately making decisions within the competitive societal system. For them, the implications of any action for the goal are likely to be systematically more ambiguous than the implications for the proxy. A scientist may not be sure which statistical model is ultimately more appropriate, but she is more likely to know which one produces a significant result and allows a high impact publication.

So in summary, the proxy will contain less information than the goal, it will be less complex (capture fewer dimensions and aspects) and it will be less ambiguous. The aspects of the societal goal which may suffer from corruption are almost by definition those aspects which are most difficult to define and quantify. More generally, these are the types of problems that arise when you try to control a complex adaptive system 6.

1 Manheim, D. & Garrabrant, S. Categorizing Variants of Goodhart’s Law. (2018).

2 Amodei, D. et al. Concrete Problems in AI Safety. (2016).

3 Smaldino, P. E. & McElreath, R. The natural selection of bad science. R. Soc. Open Sci. 3, 160384 (2016).

4 Baker, M. 1,500 scientists lift the lid on reproducibility. Nature 533, 452–454 (2016).

5 Fochler, M., Felt, U. & Müller, R. Unsustainable Growth, Hyper-Competition, and Worth in Life Science Research: Narrowing Evaluative Repertoires in Doctoral and Postdoctoral Scientists’ Work and Lives. Minerva 54, 1–26 (2016).

6 Flake, G. W. The computational beauty of nature: computer explorations of fractals, chaos, complex systems, and adaptation. (MIT Press, 1998).

Evidence

In the section about the information gradient (above), I have described why it may not be straightforward to detect a proxyeconomy (i.e. a societal system excessively oriented towards a proxy), and why qualitative evidence is essential. In a nutshell, most aspects that can be easily defined, understood and measured are likely to be incorporated into the proxy. Indeed, much of the available evidence on proxy-orientation is qualitative, found in editorials, expert testimony or surveys. But it is also important to distill out some quantitative predictions of the theory. What is important to realize is that what is incorporated into a proxy depends on a variety of sometimes quite arbitrary factors, and the process of proxy creation can be readily dissected. For instance, the generation of a scientist’s ‘track record’ depends crucially on publications. A simple analysis of journals’ evaluation criteria reveals that they largely favor positive and unexpected results. Accordingly, proxy-orientation of scientific practices should entail biases that increase positive and unexpected results. If these practices at the same time decrease scientific validity, then this is a clear indication of proxy orientation. Therefore, if a system starts revolving around the proxy measure, then we should expect clear quantitative and qualitative indicators of this.

Quantitative evidence of proxy orientation can be divided into two general categories:

- The comparison of the proxy measures which mediate competition to alternative proxy measures which play a lesser role. Both measures will imperfectly represent the actual societal goal, but only one is susceptible to corruption, so we can predict a divergence between the two measures if corruption exists. Often such ‘alternative proxy measures’ become available on a slower timescale than would be feasible to implement in competition, or they are too costly to evaluate regularly. For instance, publication output can be compared to reproducibility measures.

- Investigation and modeling of individual cultural practices. If an entire system has really become corrupted, then a careful analysis of individual prevalent cultural practices should reveal a clear proxy orientation. This may be immediately clear (as in sabotage) or be hidden in more intricate patterns. For instance, empirically selected sample sizes can be analyzed with respect to their theoretical implications for both publication probability and reproducibility.
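To illustrate the second category, here is a minimal sketch of how a sample size choice can be evaluated against both a proxy (number of publishable positive results) and a measure closer to the goal (the share of positive results that are true). It is a textbook style calculation under loudly stated assumptions, not the model of any cited paper: a two-sided two-sample z-test, a fixed total budget of subjects, a fixed standardized effect size for true hypotheses, and a fixed prior probability that a tested hypothesis is true. All names and default values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def power_two_sample(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided two-sample z-test with unit variance per group."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect_size * sqrt(n_per_group / 2)
    return (1 - STD_NORMAL.cdf(z_crit - shift)) + STD_NORMAL.cdf(-z_crit - shift)


def study_strategy(n_per_group, total_subjects=1200, effect_size=0.5,
                   prior_true=0.2, alpha=0.05):
    """Return (expected number of positive results, share of positives that are true)."""
    n_studies = total_subjects // (2 * n_per_group)   # fixed budget split into studies
    power = power_two_sample(effect_size, n_per_group, alpha)
    p_significant = prior_true * power + (1 - prior_true) * alpha
    expected_positives = n_studies * p_significant    # proxy: publishable results
    ppv = prior_true * power / p_significant          # closer to the goal: validity
    return expected_positives, ppv


if __name__ == "__main__":
    for n in (10, 20, 60):
        positives, ppv = study_strategy(n)
        print(f"n per group={n}: expected positives={positives:.2f}, true share={ppv:.2f}")
```

Under these assumptions, splitting the same budget into many small studies yields more positive results in total but a lower share of true positives, which is the pattern of a practice that benefits the proxy while harming the goal.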

In the following I have tried to collect evidence from both categories plus some of the qualitative evidence organized by societal system. I fear the result is somewhat biased to scientific systems, but that’s just what I know most about.

Science

Quantitative experimental science is an ideal research object for the theory of proxyeconomics. This is because apart from the proxies relevant in competition (publication count, impact factor, institutional affiliation, etc.) there is a wealth of quantitative parameters which become visible only in the long run (reproducibility rates, effect sizes in meta-analyses, etc.). At the same time many specific ‘cultural practices’ can be analytically modeled (choice of sample size, publication practices, etc.) with specific predictions for parameters from both domains.

Quantitative evidence of proxy association

In science, practices that increase the number/proportion of false positive findings conform to the pattern of proxy orientation. Such practices will increase the number of publishable results, but decrease the average validity of these results, as well as reproducibility. Depending on your viewpoint, false positive publications have either no real scientific value or negative value, since they can incur substantial downstream costs. In the following I review some recent reports of scientific practices that will increase false positive rates. Sometimes the practices are shown to be associated with correlates of competitivity, and generally they are shown to be widely practiced.

- Despite evidence for widespread cross-contamination of mammalian cell lines, culturists only infrequently test for cross-contamination 1,2. This is particularly confusing since the cost of testing is low and the presence of cross-contamination compromises the conclusions of any particular study 3.

- A recent study investigating the statistical inferences from fMRI research found substantial levels of false positives (up to 70%) in null data, suggesting a widespread methodological bias. The effect was systematic, similar across all major analysis platforms and likely affects thousands of studies 4.

- Antibodies are infrequently validated 5.

- Meta-analyses in diverse fields suggest that scientists overwhelmingly choose sample sizes that are too small 6,7.

- Practices such as randomization, blinding or sample size calculations are infrequently used, even in high impact journals and prestigious institutions 8,9. This is particularly surprising since high impact journals implement a more rigid and selective editorial process which is generally expected to lead to higher quality. Notably, the prevalence of reported randomization was even negatively correlated to impact factor 9.

- Similarly, Munafò et al. (2009) find that the first study of an effect (usually small and underpowered) is frequently published in a high impact journal, while subsequent studies (higher powered, and therefore more scientifically meaningful and more time consuming) are published in journals of much lower impact 10.

- Positive publication bias is correlated with competitivity 11.

- Choice of exploratory research 12.

Qualitative evidence (surveys, expert testimony, reviews, editorials):

- Widespread perceived decrease in trust in scientific publications 13,14

- Various bad scientific practices are endemic and are widely perceived to directly result from competitive pressure 15,16

- Horton (2002) surveyed authors to establish if their views are accurately represented in their publications. They found self-censorship of criticism and no reflection of the diversity of opinions about the importance and implications between co-authors 17.

- Changes in valuation practices towards competitivity and away from sociability and scientific merit 15

Stakeholders such as editors or reviewers are similarly subject to competition, potentially compounding the problem. The following points are drawn from an editorial on conflicts of interest 18:

- ‘an editor who selects for publication a psychiatric genetic study with a relatively small sample size and a level of significance not much better than 0.05 over an innovative and methodologically sound manuscript dealing with a topic that is not currently popular may be helping to enhance the impact factor of the journal at the expense of its scientific quality.’ Cited from 18

- One study 19 analysed impact factor trends and interviewed editors, and concluded that rising impact factors were due to deliberate editorial practices in spite of the editors’ dissatisfaction with impact factors as the measure of the quality of a journal (cited from 18).

- In another study 20, reviewers detected an average of 2.6 of 9 major errors in test manuscripts in BMJ, and this number was not improved after reviewer training.

- ‘An important COI for reviewers is the conflict between the professional obligation to produce a well thought-out review in a timely manner and the desire not to spend too much time on a task that is relatively thankless.’ Cited from 18

- Financial conflicts of interest 21,22. Note that here the driver of proxy-orientation (e.g. selective publication) comes from competitive pressures outside the scientific system, namely economic pressures.

Sports

- Dilger and Tolsdorf present a simple economic model predicting a positive relation between competitivity and doping in athletic tournaments. They corroborate this prediction by presenting empirical evidence of a correlation between the Gini index and doping incidence across several athletic disciplines 23.

Medicine

- Systematic review on COI of doctors 24

- Examples of Campbell’s law in Medicine 25

- Detailed analysis of biased statistics, and biased statistical representation 26

Business

Benabou and Tirole present a model and compile supporting qualitative evidence on the firm level 27.

A report by Stiglitz, Sen and Fitoussi on GDP explores the ways in which GDP, as a compound measure of economic productivity, mismeasures societal wellbeing 28.

Similarly, at the nation level, Wilkinson and Pickett present evidence showing a distinct lack of correlation between GDP and measures of human wellbeing within industrialized nations 29. Importantly, what does (negatively) correlate with most measures of wellbeing is inequality.

1 Smaldino, P.E. and McElreath, R. (2016) The natural selection of bad science. R. Soc. Open Sci. 3, 160384

2 Bekelman, J.E. et al. (2003) Scope and Impact of Financial Conflicts of Interest in Biomedical Research. JAMA 289, 454

3 Pham-Kanter, G. (2014) Revisiting financial conflicts of interest in FDA advisory committees. Milbank Q. 92, 446–70

4 Higginson, A.D. and Munafò, M.R. (2016) Current Incentives for Scientists Lead to Underpowered Studies with Erroneous Conclusions. PLOS Biol. 14, e2000995

5 Buehring, G.C. et al. (2004) Cell line cross-contamination: how aware are Mammalian cell culturists of the problem and how to monitor it? In Vitro Cell. Dev. Biol. Anim. 40, 211–5

6 Capes-Davis, A. et al. (2010) Check your cultures! A list of cross-contaminated or misidentified cell lines. Int. J. Cancer 127, 1–8

7 Freedman, L.P. et al. (2015) The Economics of Reproducibility in Preclinical Research. PLOS Biol. 13, e1002165

8 Eklund, A. et al. (2016) Cluster failure: Why fMRI inferences for spatial extent have inflated false-positive rates. Proc. Natl. Acad. Sci. 113, 7900–7905

9 Baker, M. (2015) Reproducibility crisis: Blame it on the antibodies. Nature 521, 274–276

10 Button, K.S. et al. (2013) Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–76

11 Crossley, N.A. et al. (2008) Empirical evidence of bias in the design of experimental stroke studies: a metaepidemiologic approach. Stroke. 39, 929–34

12 Macleod, M.M.R. et al. (2015) Risk of Bias in Reports of In Vivo Research: A Focus for Improvement. PLOS Biol. 13, e1002273

13 Munafò, M.R. et al. (2009) Bias in genetic association studies and impact factor. Mol. Psychiatry 14, 119–20

14 Butler, L. (2005) What Happens When Funding is Linked to Publication Counts? – Handbook of Quantitative Science and Technology Research, 2005, pp 389-405, Springer Netherlands.

15 Fanelli, D. (2010) Do Pressures to Publish Increase Scientists’ Bias? An Empirical Support from US States Data. PLoS One 5, e10271

16 Siebert, S. et al. (2015) Overflow in science and its implications for trust. Elife 4,

17 Alberts, B. et al. (2015) Opinion: Addressing systemic problems in the biomedical research enterprise. Proc. Natl. Acad. Sci. 112, 1912–1913

18 Fochler, M. et al. (2016) Unsustainable Growth, Hyper-Competition, and Worth in Life Science Research: Narrowing Evaluative Repertoires in Doctoral and Postdoctoral Scientists’ Work and Lives. Minerva 54, 1–26

19 Anderson, M.S. et al. (2007) What do mentoring and training in the responsible conduct of research have to do with scientists’ misbehavior? Findings from a National Survey of NIH-funded scientists. Acad. Med. 82, 853–60

20 Horton, R. (2002) The hidden research paper. JAMA 287, 2775–8

21 Young, S.N. (2009) Bias in the research literature and conflict of interest: an issue for publishers, editors, reviewers and authors, and it is not just about the money. J. Psychiatry Neurosci. 34, 412–7

22 Chew, M. et al. (2007) Life and times of the impact factor: retrospective analysis of trends for seven medical journals (1994-2005) and their Editors’ views. J. R. Soc. Med. 100, 142–50

23 Schroter, S. et al. (2008) What errors do peer reviewers detect, and does training improve their ability to detect them? J. R. Soc. Med. 101, 507–14

24 Dilger, A. and Tolsdorf, F. (2010) Doping und Wettbewerbsintensität. Schmollers Jahrb. 130, 95–115

25 Spurling, G.K. et al. (2010) Information from pharmaceutical companies and the quality, quantity, and cost of physicians’ prescribing: a systematic review. PLoS Med. 7, e1000352

26 Poku, M. (2016) Campbell’s Law: implications for health care. J. Health Serv. Res. Policy 21, 137–9

27 Bénabou, R. and Tirole, J. (2016) Bonus Culture: Competitive Pay, Screening, and Multitasking. J. Polit. Econ. 124, 305–370

28 Stiglitz, J.E. et al. (2010) Mismeasuring our lives : why GDP doesn’t add up : the report, New Press.

29 Wilkinson, R.G. and Pickett, K. (2009) The Spirit Level: Why More Equal Societies Almost Always Do Better. Allen Lane

Psychological aspects

The question of how individuals in such complex societal systems make decisions is obviously daunting. Clearly it will depend heavily on what system we are actually talking about. The professional ethos, character types and values in medicine, science, politics or business may differ dramatically. However, there are also some regularities that can inform how individuals faced with a proxy based competition may react. The differences between the societal systems mentioned above can then be used to inform how we think different systems may be differentially affected by potential proxy-orientation processes.

Multitasking

The main insight from psychological, behavioral economics and behavioral ethics research is that both an extrinsic competitive and a moral incentive should be expected to impact decisions 1–5. The traditional economic concept of ‘homo economicus’, a human who exclusively follows her rational self interest, has, despite its shortcomings, proven remarkably successful. However, inquiries about potential proxy-orientation within any professional association will quickly reveal that all its members believe their professional/moral responsibilities are not susceptible to competitive pressures. The truth obviously lies somewhere in between, and there is a growing body of research revealing where on the spectrum an individual decision may fall, depending on situation and character. Recently, Dolly Chugh has suggested that the need to maintain a positive self-image is actually the dominant principle, and that self-interested actions are only permitted to the degree that this self-image is not endangered 5. To capture this paradigm shift from standard economic views, she suggests replacing the term bounded rationality with the term bounded ethicality.

An economic approach to capture the simultaneous valuation of two different value dimensions is multitasking theory. Principal-Agent models of multitasking address how a principal (such as an employer) can maximize the output of her agents (the employees) by deriving an optimal contract. The principal is analogous to the societal system, which imposes competition on individuals (agents). Such economic models capture the central aspects of proxyeconomics by combining multitasking and imperfect information in one of the dimensions 6–8. In both approaches effort can be reallocated from a less easily measurable task to a more easily measurable task. A steeper incentive scheme will promote this reallocation, even (or especially) for fully rational decision makers. Such models have been offered as an explanation of why high innovation is frequently associated with flat incentive schemes and why strong competition can lead to increasing short termism, excessive risk taking, increased externalities and other undesirable effects. The main conclusion is that aggregate welfare is hill shaped with respect to incentive strength. This nicely mirrors the information theoretic treatments of Goodhart’s law mentioned above 9. Proxyeconomics suggests this conclusion may be generalizable to any proxy mediated competitive system.
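The hill shape can be reproduced in a deliberately simple toy model. The sketch below is in the spirit of multitasking models but is not the model of any cited paper; every functional form and number is an assumption made for illustration. An agent divides effort between a measured task, which is rewarded by a bonus, and an unmeasured task, which she values only intrinsically. The two efforts are substitutes in a quadratic cost function, and welfare is the sum of both efforts minus the effort cost.

```python
def agent_effort(bonus, intrinsic=0.5, substitution=0.5):
    """Agent's best response: (measured effort, unmeasured effort), both non-negative.

    The agent maximizes bonus * e_m + intrinsic * e_u - effort_cost(e_m, e_u).
    """
    k = substitution
    if bonus <= k * intrinsic:       # bonus too weak: only the intrinsically valued task is done
        return 0.0, intrinsic
    if intrinsic <= k * bonus:       # bonus so strong that the unmeasured task is crowded out
        return bonus, 0.0
    denom = 1 - k ** 2               # interior solution of the two first-order conditions
    return (bonus - k * intrinsic) / denom, (intrinsic - k * bonus) / denom


def effort_cost(e_m, e_u, substitution=0.5):
    """Quadratic effort cost; the cross term makes the two efforts substitutes."""
    return 0.5 * (e_m ** 2 + e_u ** 2) + substitution * e_m * e_u


def welfare(bonus, intrinsic=0.5, substitution=0.5):
    """Toy welfare: both tasks are equally valuable to society, net of effort cost."""
    e_m, e_u = agent_effort(bonus, intrinsic, substitution)
    return e_m + e_u - effort_cost(e_m, e_u, substitution)


if __name__ == "__main__":
    for bonus in (0.0, 0.5, 0.75, 1.0, 2.0):
        e_m, e_u = agent_effort(bonus)
        print(f"bonus={bonus}: measured={e_m:.2f} unmeasured={e_u:.2f} welfare={welfare(bonus):.3f}")
```

With these assumed parameters, welfare first rises with the bonus, because more total effort is induced, and then falls, because effort on the unmeasured task is displaced and costly effort on the measured task overshoots. Where the peak lies depends entirely on the assumed parameters.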

Psychological profiles

Importantly, the concept of ‘proxy-based competition’ has several direct implications for the psychological profiles of decision options. Moore and Loewenstein (2004) provide an early review of such systematic psychological differences under the heading of ‘conflicts of interest’ 10. Specifically, implications for the societal goal are likely to be associated with higher ambiguity, longer timeframes and less personal relevance than implications for the proxy. Indeed, the flourishing research fields of behavioral economics and, more recently, behavioral ethics are starting to paint a detailed picture of how such properties are likely to affect decisions.

Ambiguity

A scientist may be unsure about which statistical model is really most appropriate, simply because it is a difficult question. However, she is far more likely to be able to judge which action will lead to a publication. Importantly, a clear relation exists between the level of ambiguity and the potency of biases to influence decisions. In a pioneering study, Schweitzer and Hsee (2002) found that biased or ‘motivated’ communication was constrained by the ‘uncertainty and vagueness’ of information, even when the presumed costs and benefits of misrepresenting information were held constant 11. Similarly, studies on desirability bias have revealed that events which were more difficult to predict, i.e. more ambiguous, were associated with greater bias 12,13. A more recent body of work from behavioral economics investigates decisions with real payouts in the laboratory. For instance, Mazar et al. (2008) investigate cheating, i.e. cases in which the decision maker actually has sufficient information to decide accurately towards the target variable 2. Interestingly, in these experiments a large majority of participants display a certain ‘moral flexibility’, cheating just a little bit, even in cases where little ambiguity about the correct decision exists. The authors argue that the propensity to cheat is bounded by the desire to maintain a positive self-concept as honest (self-concept maintenance theory). Importantly, paying participants with a token that could be exchanged for the monetary reward in an adjacent room, instead of paying them directly, significantly increased cheating 2,14. The authors propose that this is due to ‘categorization malleability’, which more easily allows participants to categorize their behavior as honest. Corroborating and extending these conclusions, Chance et al. (2011) introduce a certain level of ambiguity in order to test whether subjects self-deceive. Consistent with the findings in conflict of interest (COI) research, the ambiguity of the task allowed individuals to remain unaware of their moral breaches. Notably, this was the case even if their self-deception proved costly in the longer term, emphasizing the power of the underlying psychological mechanism 15.

Motivated Reasoning

At the same time, the field of economics is adapting ideas from psychology. Bénabou and Tirole 16 have elegantly integrated a large range of empirical psychological phenomena into an economic framework in which beliefs are acquired or resisted based on their utility. One important insight from this work is that the psychological mechanisms mediating the influence of a competitive incentive frequently operate outside conscious awareness. This is consistent with a large body of empirical work in which biases can be statistically demonstrated while the individual decision makers appear to remain unaware of them 17–21. Literature from cognitive psychology addressing motivated reasoning offers some mechanistic insight into why biases may remain unconscious. Namely, biases have been found to act directly at each step of information processing, including evidence seeking, perception, accumulation and appraisal 22,23. Individuals assimilate new information through a perceptual lens of their existing beliefs, attentional framing and preferred outcome 10,24,25. In ambiguous settings, tempting information can create ethical blind spots during perception 1. Furthermore, evidence inconsistent with prior beliefs or emotional preferences may be scrutinized more extensively 26, or the decision criteria may be adapted 21. Affective responses have been shown to play a significant role in mediating biased evidence assimilation 27–29. This view is consistent with the current neuroscientific understanding of the neural correlates of consciousness, whereby such correlates are proposed to be the final product of large-scale subconscious computations 30,31. Accordingly, biases by proximal incentives are likely to be introduced, in large part, outside consciousness. Finally, research on attribution theory has shown that individuals may interpret feedback on good performance in competition as indicative of superior ability rather than increased bias 32.

In addition to the central psychological differences between competitive and moral incentives introduced above, numerous other psychological effects have been reported that are likely to influence their unequal weighting. For instance, biases may be compounded by high levels of individual creativity 33,34, by social recognition of ‘proxy’ accomplishments 15, by competing as part of a team 23,35,36, or by increased cognitive load and personal sacrifice 10,37,38.

Furthermore, the public display of the outcome of competition may serve as a behavioral benchmark, effectively communicating and simultaneously lowering moral norms 4,39. A related effect is the diffusion of being pivotal to a morally undesired outcome 40. Interestingly, winning a competition has itself been suggested to have a detrimental effect on morality in subsequent decisions in certain circumstances 41. Additionally, experimental economic investigations have revealed that goal setting (monetary or nonmonetary) can increase unethical behavior 42,43. This effect was particularly strong when individuals fell just short of their goals, a situation that is presumably common in elimination tournaments. Interactions between the incentive trade-off suggested here and such effects are interesting areas for future study.

In conclusion, we can safely assume that most professionals care not only about their personal benefit, but also about what they view as their moral and professional responsibilities. However, when proxy and goal are in conflict, systematic differences between the psychological properties of the decision options are likely to bias decisions towards the proxy. Furthermore, it is likely that much of this bias acts purely subconsciously. In light of this, it is particularly interesting that self-reported biases, such as the questionable research practices reported in surveys, are so prevalent 44.

1. Pittarello, A., Leib, M., Gordon-Hecker, T. & Shalvi, S. Justifications shape ethical blind spots. Psychol. Sci. 26, 794–804 (2015).
2. Mazar, N., Amir, O. & Ariely, D. The Dishonesty of Honest People: A Theory of Self-Concept Maintenance. J. Mark. Res. 45, 633–644 (2008).
3. Hochman, G., Glöckner, A., Fiedler, S. & Ayal, S. ‘I can see it in your eyes’: Biased Processing and Increased Arousal in Dishonest Responses. J. Behav. Decis. Mak. 29, 322–335 (2016).
4. Falk, A. & Szech, N. Morals and markets. Science 340, 707–11 (2013).
5. Chugh, D. & Kern, M. C. A dynamic and cyclical model of bounded ethicality. Res. Organ. Behav. 36, 85–100 (2016).
6. Holmstrom, B. & Milgrom, P. Multitask Principal-Agent Analyses: Incentive Contracts, Asset Ownership, and Job Design. J. Law, Econ., Organ. 7, 24–52 (1991).
7. Bénabou, R. & Tirole, J. Bonus Culture: Competitive Pay, Screening, and Multitasking. J. Polit. Econ. 124, 305–370 (2016).
8. Baker, G. P. Incentive Contracts and Performance Measurement. J. Polit. Econ. 100, 598–614 (1992).
9. Manheim, D. & Garrabrant, S. Categorizing Variants of Goodhart’s Law. (2018).
10. Moore, D. A. & Loewenstein, G. Self-Interest, Automaticity, and the Psychology of Conflict of Interest. Soc. Justice Res. 17, 189–202 (2004).
11. Schweitzer, M. E. & Hsee, C. K. Stretching the Truth: Elastic Justification and Motivated Communication of Uncertain Information. J. Risk Uncertain. 25, 185–201 (2002).
12. Olsen, R. A. Desirability bias among professional investment managers: some evidence from experts. J. Behav. Decis. Mak. 10, 65–72 (1997).
13. McGregor, D. The major determinants of the prediction of social events. J. Abnorm. Soc. Psychol. 33, 179–204 (1938).
14. Chance, Z., Gino, F., Norton, M. I. & Ariely, D. The slow decay and quick revival of self-deception. Front. Psychol. 6, 1075 (2015).
15. Chance, Z., Norton, M. I., Gino, F. & Ariely, D. Temporal view of the costs and benefits of self-deception. Proc. Natl. Acad. Sci. U. S. A. 108 Suppl, 15655–9 (2011).
16. Bénabou, R. & Tirole, J. Mindful Economics, The Production, Consumption, and Value of Beliefs. J. Econ. Perspect. 30, 141–164 (2016).
17. Orlowski, J. P. & Wateska, L. The effects of pharmaceutical firm enticements on physician prescribing patterns. There’s no such thing as a free lunch. Chest 102, 270–3 (1992).
18. Dana, J. & Loewenstein, G. A social science perspective on gifts to physicians from industry. JAMA 290, 252–5 (2003).
19. MacCoun, R. J. Biases in the interpretation and use of research results. Annu. Rev. Psychol. 49, 259–287 (1998).
20. Coyle, S. L. Physician-industry relations. Part 1: individual physicians. Ann. Intern. Med. 136, 396–402 (2002).
21. Uhlmann, E. & Cohen, G. L. Constructed criteria: redefining merit to justify discrimination. Psychol. Sci. 16, 474–80 (2005).
22. Babcock, L. & Loewenstein, G. Explaining Bargaining Impasse: The Role of Self-Serving Biases. J. Econ. Perspect. 11, 109–126 (1997).
23. Schulz-Hardt, S., Frey, D., Lüthgens, C. & Moscovici, S. Biased information search in group decision making. J. Pers. Soc. Psychol. 78, 655–69 (2000).
24. Kunda, Z. The case for motivated reasoning. Psychol. Bull. 108, 480–98 (1990).
25. Balcetis, E. & Dunning, D. See what you want to see: motivational influences on visual perception. J. Pers. Soc. Psychol. 91, 612–25 (2006).
26. Edwards, K. & Smith, E. E. A Disconfirmation Bias in the Evaluation of Arguments. J. Pers. Soc. Psychol. 71, 5–24 (1996).
27. Munro, G. D. & Ditto, P. H. Biased Assimilation, Attitude Polarization, and Affect in Reactions to Stereotype-Relevant Scientific Information. Personal. Soc. Psychol. Bull. 23, 636–653 (1997).
28. Westen, D., Blagov, P. S., Harenski, K., Kilts, C. & Hamann, S. Neural bases of motivated reasoning: an FMRI study of emotional constraints on partisan political judgment in the 2004 U.S. Presidential election. J. Cogn. Neurosci. 18, 1947–58 (2006).
29. Ditto, P. H. & Lopez, D. F. Motivated skepticism: Use of differential decision criteria for preferred and nonpreferred conclusions. J. Pers. Soc. Psychol. 63, 568–584 (1992).
30. Tononi, G. & Koch, C. The neural correlates of consciousness: an update. Ann. N. Y. Acad. Sci. 1124, 239–61 (2008).
31. Edelman, G. M. & Tononi, G. A Universe of Consciousness. Basic Books (2000).
32. Korn, C. W., Rosenblau, G., Rodriguez Buritica, J. M. & Heekeren, H. R. Performance Feedback Processing Is Positively Biased As Predicted by Attribution Theory. PLoS One 11, e0148581 (2016).
33. Gino, F. & Ariely, D. The dark side of creativity: original thinkers can be more dishonest. J. Pers. Soc. Psychol. 102, 445–59 (2012).
34. Gino, F. & Wiltermuth, S. S. Evil genius? How dishonesty can lead to greater creativity. Psychol. Sci. 25, 973–81 (2014).
35. Gino, F., Ayal, S. & Ariely, D. Self-Serving Altruism? The Lure of Unethical Actions that Benefit Others. J. Econ. Behav. Organ. 93, (2013).
36. Conrads, J., Irlenbusch, B., Rilke, R. M. & Walkowitz, G. Lying and team incentives. J. Econ. Psychol. 34, 1–7 (2013).
37. Greene, J. D., Morelli, S. A., Lowenberg, K., Nystrom, L. E. & Cohen, J. D. Cognitive load selectively interferes with utilitarian moral judgment. Cognition 107, 1144–54 (2008).
38. Sah, S. & Loewenstein, G. Effect of reminders of personal sacrifice and suggested rationalizations on residents’ self-reported willingness to accept gifts: a randomized trial. JAMA 304, 1204–11 (2010).
39. Cialdini, R. B., Reno, R. R. & Kallgren, C. A. A Focus Theory of Normative Conduct: Recycling the Concept of Norms to Reduce Littering in Public Places. J. Pers. Soc. Psychol. 58, 1015–1026 (1990).
40. Falk, A. & Szech, N. Diffusion of Being Pivotal and Immoral Outcomes. Work. Pap. (2016).
41. Schurr, A. & Ritov, I. Winning a competition predicts dishonest behavior. Proc. Natl. Acad. Sci. 113, 201515102 (2016).
42. Ordóñez, L. D., Schweitzer, M. E., Galinsky, A. D. & Bazerman, M. H. Goals Gone Wild: The Systematic Side Effects of Overprescribing Goal Setting. Acad. Manag. Perspect. 23, 6–16 (2009).
43. Schweitzer, M. E., Ordóñez, L. & Douma, B. Goal setting as a motivator of unethical behavior. Acad. Manag. J. 47, 422–432 (2004).
44. Baker, M. 1,500 scientists lift the lid on reproducibility. Nature 533, 452–454 (2016).